Stories

It is difficult to maintain a grip on a spinning world. As the world recently began shifting back from the longest and sunniest days toward an inevitable march into darkness and cold, I lost my grip for a moment as the velocity changed without me.

It is amazing what the ritual morning walk through the park can do. The morning begins by finding your way through the wreckage: everything the previous day destroyed that couldn’t be salvaged and flushed as part of the evening dream cycles.

I drove up to Maine and back last week. It was the first time I have driven in over a year. I do not miss it. I have already spent large chunks of time not owning a car or driving in the last decade, but living with functional public transit in a city that has everything I need, while not owning a car, has changed me. I do not want to go back. I want to spend the rest of my life not driving if at all possible. I will drive when I must, but I will work as hard as I possibly can to stay out ...

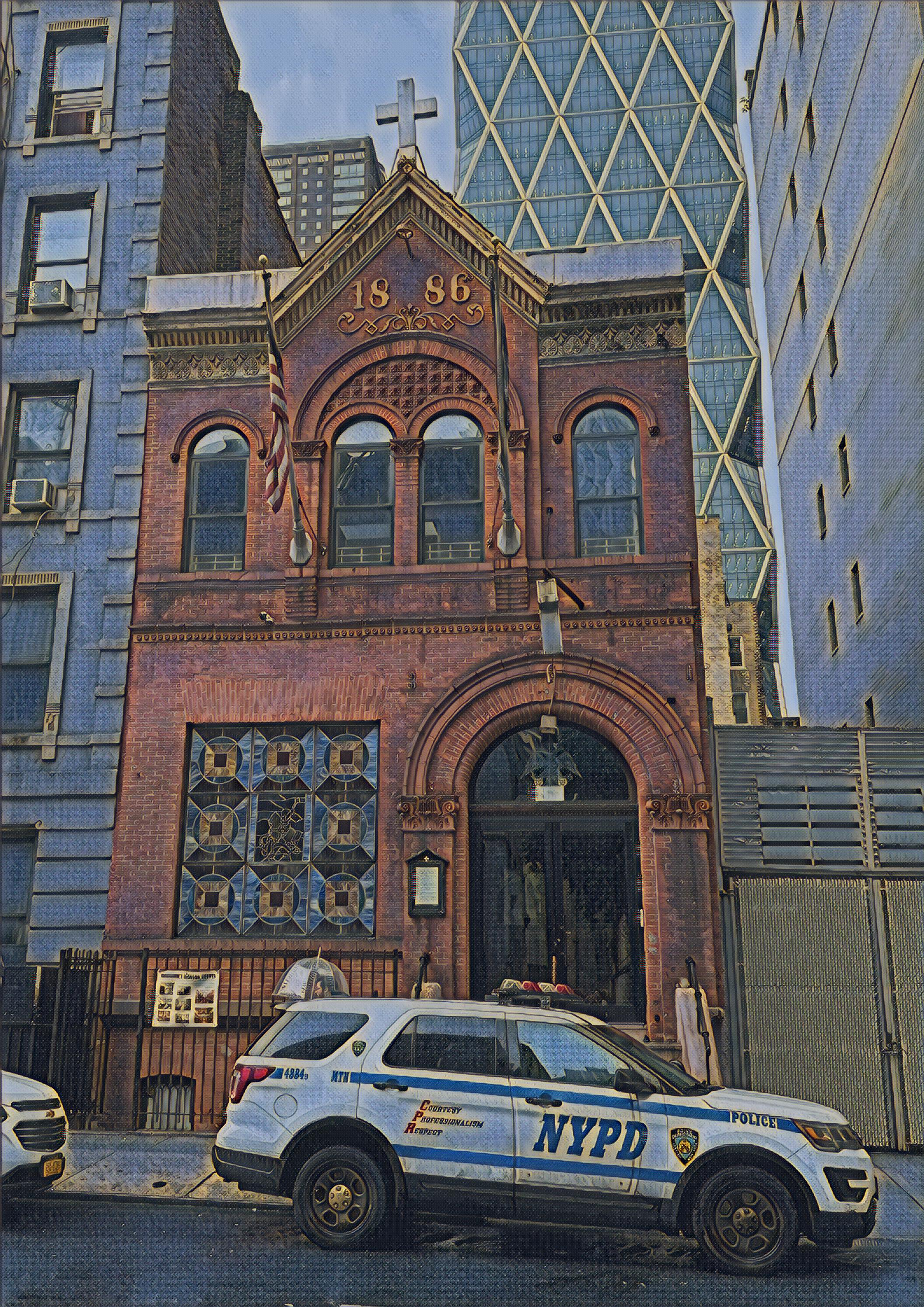

Audrey messaged me with a heads-up that there would be a critical mass ride from Union Square to City Hall in protest of NYPD Commissioner Jessica Tisch’s draconian crackdown on e-bikes in NYC. Audrey has ballet Friday evenings, so it was a perfect time for Poppy and me to get out on the bike and show our support for cyclists in NYC. We had already done four loops of Central Park that day, but we were up for the ride down to Union Square in support of the movement.

I hear a lot of folks shaming those of us who shame people for using artificial intelligence. There is a lot of concern that if you shame young people, or other people who aren’t familiar with the inner workings of the technology and business surrounding AI, you will damage their confidence and feelings, or, I guess, the market.

After having a front seat for the evolution of Internet technology this century, participating in the Silicon Valley hustle, and dabbling in secondary financial markets (Nasdaq, Forge), you realize that artificial intelligence is just crypto, with predictive algorithms and data replacing the complex “verifiable” math of the blockchain. Instead of the core being math, the core can be anything in an AI world. Then you get the same Vegas-style casino on top of what...

I finished reading Tripping on Utopia: Margaret Mead, the Cold War, and the Troubled Birth of Psychedelic Science, by Benjamin Breen. It was a good book that was easy to finish. The book filled in my “civilization map” for the period between 1930 and 1970, and connected some interesting dots between the cybernetics world of the 1950s, the Macy Conferences that gave birth to the world of compute and artificial intelligence shapin...

I spent two days at API Days in NYC last week talking APIs, and in the lead-up to the conference I didn’t do much writing, focusing my energy on my keynote and on being present. Coming out of the conference I haven’t done any writing because I don’t have any mojo. It is now a week since the last day of the conference and I am only just now writing anything in my notebook and hitting publish on API Evangelist and Kin Lane. The experience took it out of me.

Ed Zitron is on fire most weeks in his newsletter. Some of the time he goes over the top for me, but it is hard to dismiss that he has his finger on the pulse of what is happening right now. He said something in his newsletter this week that caught my attention and reflects what I am seeing at the intersection of API operations and AI hype and hustle, which I think sets the tone right now more than any single technological trend.

The way my brain works leaves me very focused on whatever has the attention of my brain in any given moment. My intense focus on technical details, stories, and other things I find interesting, important, and a priority has led to great success as the API Evangelist, but it has also caused me a lot of pain over the years. I have to pay extra special attention to keeping my mouth shut and ears open when engaging with people, something that has made dating earlier in my life, and other so...

I have learned a lot about myself over a 30+ year career in tech. I started programming in 1983 on a Commodore 64, and had my first programming job in 1988, writing COBOL for the software used by schools in Oregon. I now understand more about the personality traits that attracted me to computers, but also the reasons why companies were looking for nerds like me to program computers, and it isn’t just that I knew a certain programming language; it was more about the human things I don’t see than it wa...

Growing up as a libertarian, when I heard others saying they are libertarian I’d hear someone who was fed up with the state of politics and separating themselves from the fucked up status quo, but now I just hear someone who hasn’t done any deep reading or talked to many black and brown folks about how the world works. It is something that pushed me to abandon this portion of my identity between 2010 and 2015.

It is a real challenge to help people who are struggling with an overactive brain understand the importance of reading and writing, but my advice for anyone struggling with mental stability and health in this moment is to turn off the TV and the Internet (hint: they are the same thing), and pick up a book and begin reading. While reading, also begin to write down your thoughts about what you are seeing, thinking, and feeling. If you do this for long enough, you will begin to find your way....

I’ve done some research work to help improve my usage of the word abstraction. I have wildly used the word throughout my 30-year technology career, and was looking to sharpen how I apply this important word. I’ve learned that I came by the slippage in meaning rather ignorantly as part of my computer science indoctrination over the last couple of decades.

All of the fluoride insanity you hear from RFK is stuff I heard growing up in Oregon. It isn’t anything new. However, as I watched a video of RFK stating that you get dumber with every bit of fluoride you ingest, I can’t help but wonder why they are targeting fluoride and not lead, when lead literally has the effect they are trying to attach to fluoride.

I heard our transportation secretary say our subways are dirty and dangerous. It is something I’ve heard throughout my life and even parroted early on in my adulthood. As someone who now rides the subway as their only means of transportation and is obsessed with how the subway works and doesn’t work, I find it has become a revealing statement for understanding how people are racist and can’t separate how they feel about people from how cities work.

I love walking down the streets of New York City and hearing the diverse languages that are spoken. I can spot French and Italian, and occasionally German and Spanish, but I am also hyperaware of the diversity of Eastern European and Central and South American languages whose nuances I can’t always make out with my poor hearing. Every time I catch a conversation in another language I think about the people I grew up with who love to say you have to speak English in the United States.

Poppy and I got another flat in the park yesterday. The sidecar wears through the rubber on the tire in ways I don’t fully understand yet and will have to work to dial in. Spots on the tire emerge as it lifts up and then comes down on the pavement, wearing holes across the entire center of the tire, but in some areas the wear goes much deeper. As we came off Cat Hill on the east side I heard a big pop behind me (my hearing sucks), and I quickly felt the familiar wobble in the handlebars...

I was recently reading a lot about Stafford Beer, a cybernetician from the 1950s, 60s, and 70s. A word he used to describe the world outside of corporations has gotten lodged in my brain this week: variety. It is a word that seems simple and innocuous, but for me it expresses in a meaningful way what I like about the very human world we live in. Oftentimes I will call the world messy, chaotic, and unpredictable, but I’d say varie...

I wrote a while back about people walking through the world with earbuds in, tethered to some indoor environment, but this week I am fascinated by cops on their cell phones. Both of these situations reflect our unhealthy relationship with technology, which from my vantage point is being driven by APIs. I know many refuse to see it, but being on our phones so much ...

I like to clean my house. Not a simple clean, but a deep clean, on your hands and knees scrubbing the floor. It’s weird, I know, but I do. I can get into a real zen place with it, and afterwards I feel more connected to my environment and my mind (and house) feel less cluttered. I learned to clean from a lady named Liz, from when I was 17 until about 21. Liz and her husband, Eld, would pay me in herb to clean their house and do chores around their property.

The hype around artificial intelligence has been so great that the valuations of the companies producing AI have gone through the roof. Not just Microsoft, Facebook, Google, and OpenAI, but all the other startups in their shadows. Even the sensible uses of artificial intelligence are overpriced and carry unsustainable valuations. This isn’t an industry that takes market humblings in stride; it will shift its focus to wherever money can be made. One might point the finger at ...

As we get close to the five year anniversary of Isaiah’s death, and as I continue to lighten my load in these stressful times, I am thinking a lot about the crushing weight of capitalism, patriarchy, white supremacy, and the uncaring nature of technology. When I spent the summer of 2016 out in the woods with Isaiah, I was reminded of the weight of the world on all of us in some profound ways that will...

As I reassess my career as a database and API specialist in this Trumpian age, I am thinking deeply about how our computational need to categorize and organize things into databases, so that we can help people, tends to actually reflect much more deep-seated authoritarian tendencies present in our American society. I have read numerous stories lately about how categorizing and standing up a database, and even an API, begins from a desire to help a group of people, but then it quickly becomes abo...

I am drawing all the lines between me and artificial intelligence to let folks know where I stand. I recently made it clear that I am saying no to artificial intelligence as part of my API Evangelist work, and where I stand with folks feeling...
